If you are a student and would like to discuss my papers or how to apply to a PhD program in the US, please email me at achaubey at usc dot edu.

For students who wish to join our lab, please check our lab's open positions.

I am a CS PhD student at the Institute for Creative Technologies, University of Southern California, where I am advised by Prof. Mohammad Soleymani in the Intelligent Human Perception Lab. I am a Bronze Medallist from the 2021 batch of the Indian Institute of Technology Roorkee.

In my PhD, I work on post-training techniques, such as preference optimization, for multimodal (audio/video/omni) LLMs to improve their social and emotion understanding. I also collaborate on projects related to video generation for social behaviors.

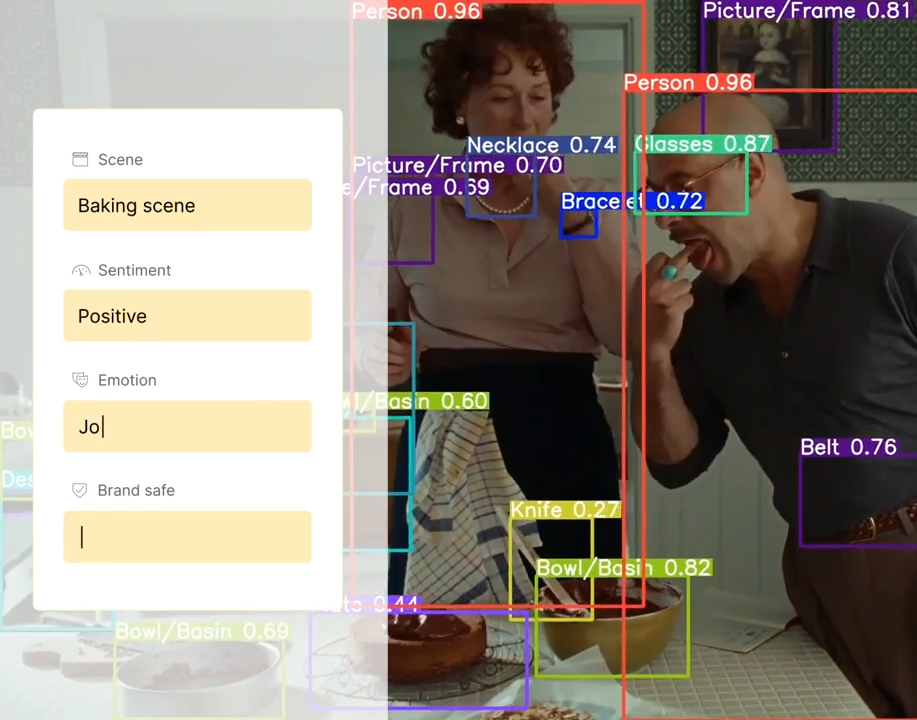

Prior to this, I was a Founding Research Engineer at Anoki AI, where I worked on multimodal content understanding and retrieval. I have also worked at LG Ad Solutions on speaker recognition, audio-based automatic content recognition, and voice cloning. Previously, I interned at Adobe Research with Sumit Shekhar, at the Vision and AI Lab, IISc Bengaluru, with Prof. R. Venkatesh Babu, and at IIT Roorkee with Prof. R. Balasubramanian.

I am looking for Research/Applied Scientist internship positions for Summer 2026 on multimodal LLMs (audio/visual/omni) and video generation. Please reach out if you have open positions.

Multimodal LLM Tuning and Post-training, Emotion understanding, Social AI

Ashutosh Chaubey, Jiacheng Pang, Maksim Siniukov, Mohammad Soleymani

Xulang Guan*, Ashutosh Chaubey*, Maksim Siniukov, Belle Hsieh, Zongjian Li, Mohammad Soleymani

Ashutosh Chaubey, Xulang Guan, Mohammad Soleymani

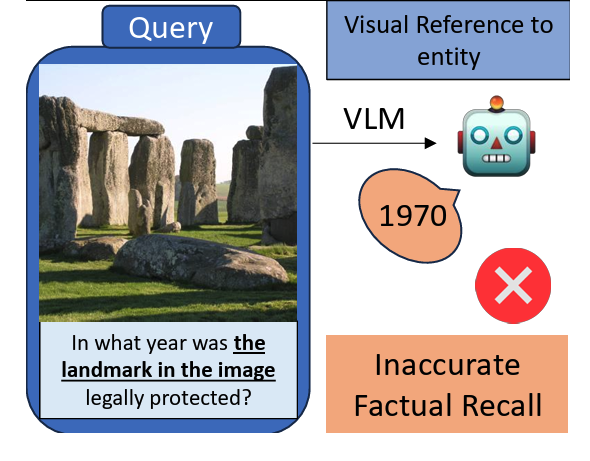

Dhananjay Ashok, Ashutosh Chaubey, Hirona Arai, Jonathan May, Jesse Thomason

Maksim Siniukov*, Di Chang*, Minh Tran, Hongkun Gong, Ashutosh Chaubey, Mohammad Soleymani

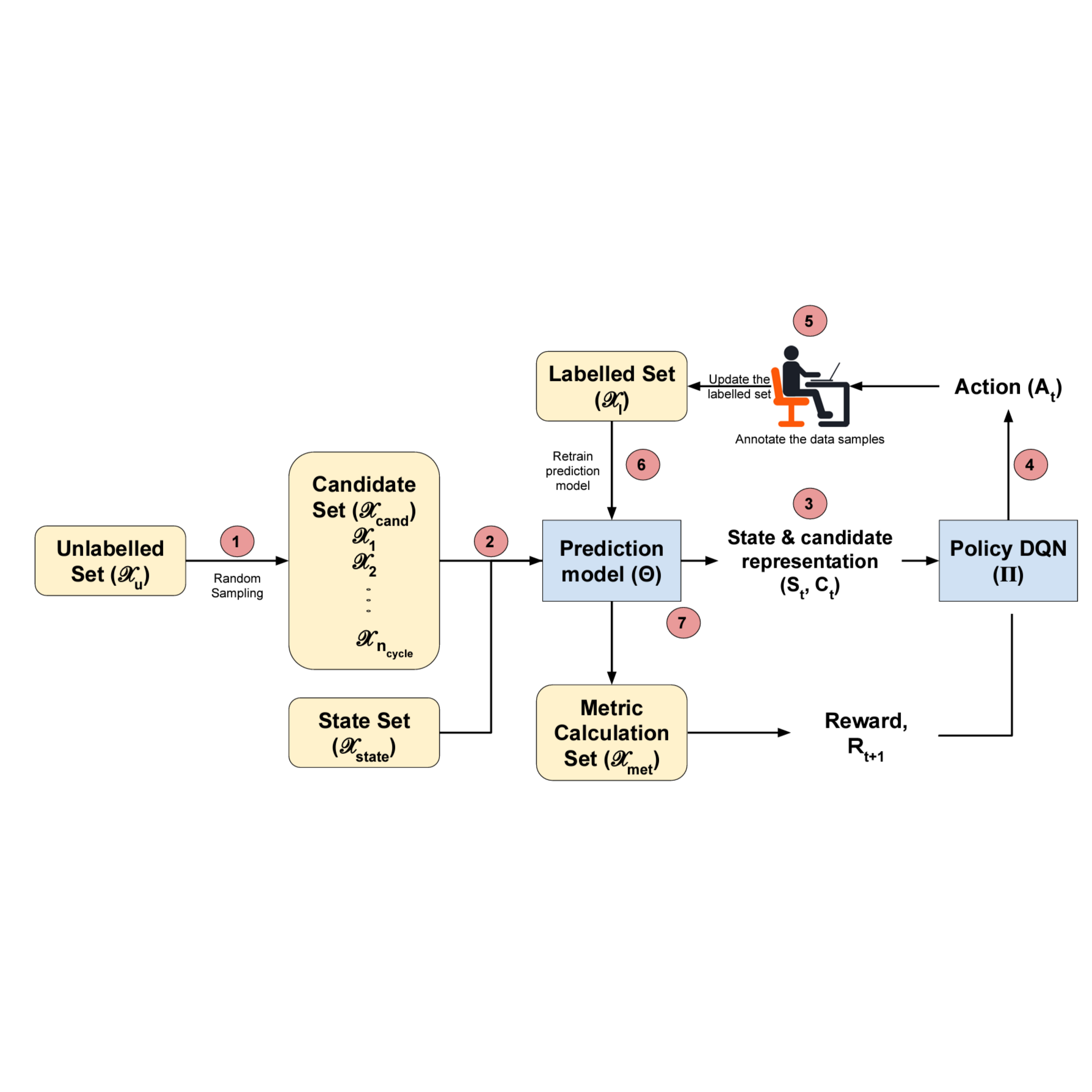

Ashutosh Chaubey, Anoubhav Agarwaal, Sartaki Sinha Roy, Aayush Agrawal, Susmita Ghose

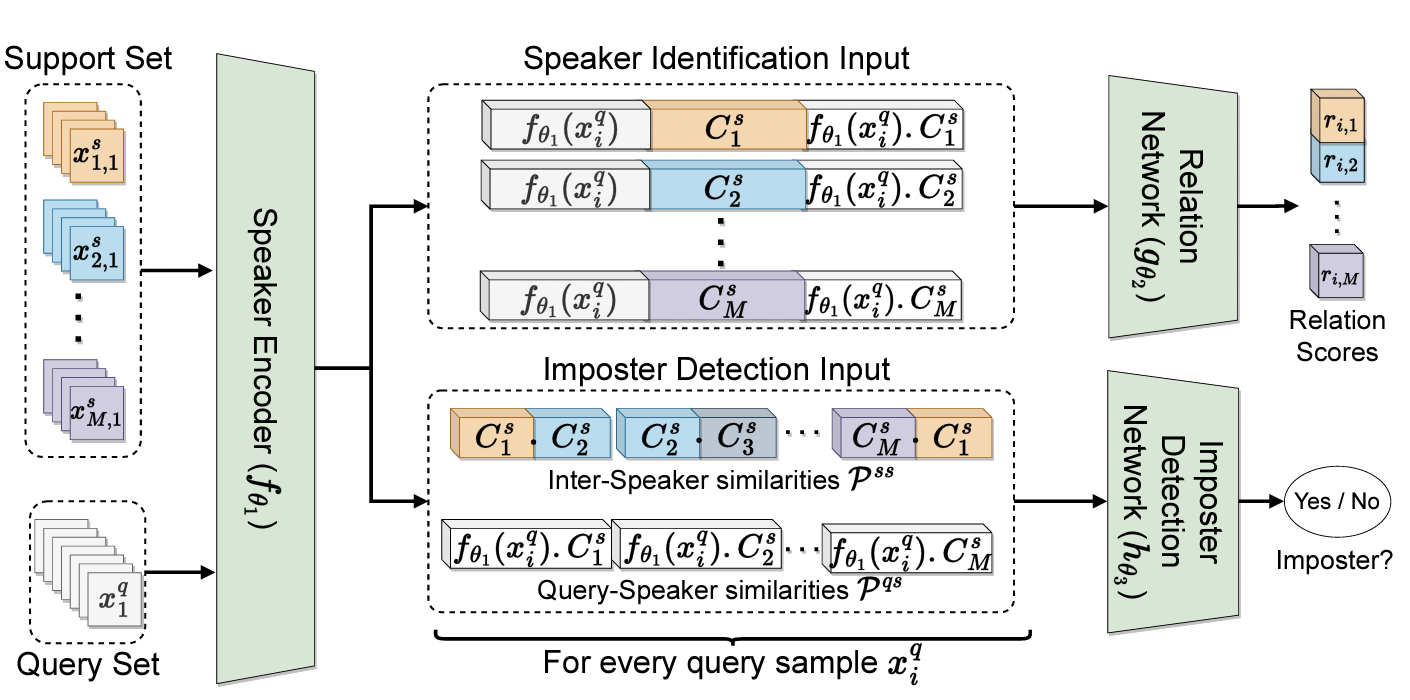

Ashutosh Chaubey, Sparsh Sinha, Susmita Ghose

Ashutosh Chaubey, Sparsh Sinha, Susmita Ghose

Sumit Shekhar, Bhanu Prakash Reddy Guda, Ashutosh Chaubey, Ishan Jindal, Avneet Jain

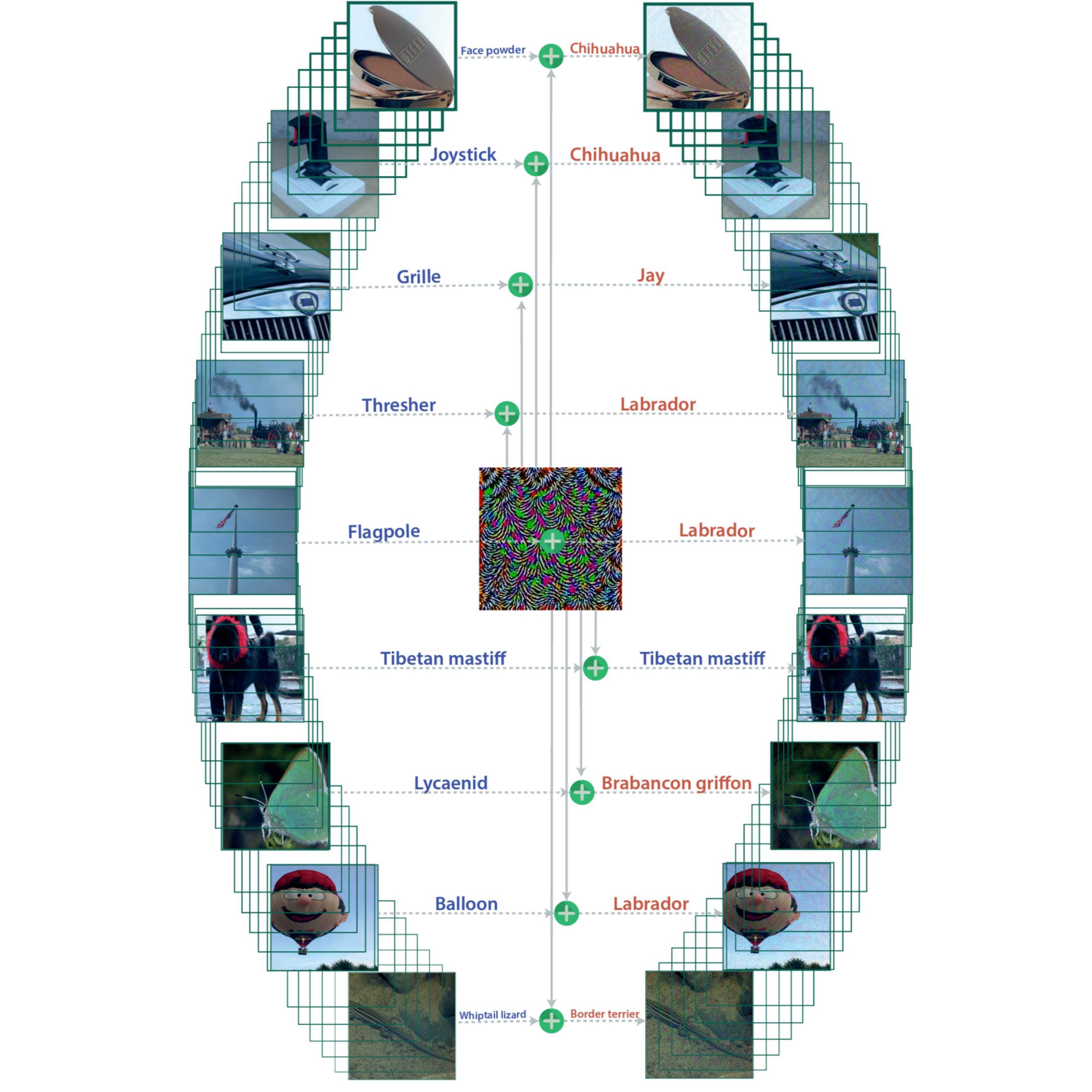

Ashutosh Chaubey*, Nikhil Agrawal*, Kavya Barnwal, Keerat K. Guliani, Pramod Mehta

University of Southern California – PhD, Computer Science (2024 - Present), GPA: 4.0/4.0

Graduate Researcher – Intelligent Human Perception Lab, Institute for Creative Technologies

Indian Institute of Technology Roorkee – BS, Computer Science (2017 - 2021), GPA: 9.718/10

Chair - ACM IIT Roorkee Chapter | Co-President - Vision and Language Group